OpenAI has just released GPT-5.2, its most advanced model to date for professional work and long-running AI agents. It is a meaningful milestone, not because it wins another benchmark, but because it moves AI closer to something contact centers have been waiting for: systems that can reason, act, and follow through.

For customer operations leaders, the question is no longer “Can AI respond to customers?”

The question is “Can AI actually resolve customer issues end to end, safely and at scale?”

OpenAI claims that GPT-5.2 brings us closer to that reality.

What GPT-5.2 actually changes

1) It’s not just “better at answers”, it’s better at reasoning

In customer operations, the hard part is rarely writing the response. The hard part is reasoning about what the right outcome is for the customer, and then carrying out the steps that get there.

Check the account. Look up the order. Verify policy. Trigger a refund. Update the CRM. Summarize the outcome. Escalate when needed.
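To make that chain of steps concrete, here is a minimal sketch of a refund case handled as an explicit sequence with a policy check and an escalation path. Everything in it is hypothetical: the `Case` fields, the policy values, and the `resolve_refund` function are illustrative assumptions, not part of any OpenAI or Teneo API.

```python
from dataclasses import dataclass, field

# Hypothetical policy values, chosen only for illustration.
REFUND_WINDOW_DAYS = 30
AUTO_REFUND_LIMIT = 100.0


@dataclass
class Case:
    order_total: float
    days_since_delivery: int
    log: list[str] = field(default_factory=list)


def resolve_refund(case: Case) -> str:
    """Walk through the steps in order and record each one in the case log."""
    case.log.append("checked account and order")

    # Verify policy before any action is taken.
    if case.days_since_delivery > REFUND_WINDOW_DAYS:
        case.log.append("policy check failed: outside refund window")
        return "declined"

    # Escalate when the amount is above what automation may approve.
    if case.order_total > AUTO_REFUND_LIMIT:
        case.log.append("escalated: amount above auto-refund limit")
        return "escalated"

    case.log.append("refund triggered")
    case.log.append("CRM updated")
    return "refunded"
```

The point of the sketch is that the outcome depends on checks and actions, not on how well the final message is worded.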

2) Professional performance is going up fast

OpenAI’s GDPval evaluation is designed to measure “economically valuable” work across 44 occupations.

Reporting on the launch highlights that GPT-5.2 posts a big jump on this style of professional output, including creating structured artifacts and work products.

3) Pricing and model choice are now part of the design

OpenAI is putting GPT-5.2 clearly into the “best for coding and agentic tasks” slot on its model comparison pages, with published pricing for usage-based deployments.

That’s a signal that teams should stop thinking “one model for everything” and start thinking in tiers, where the system chooses the right capability level at the right time.
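As a rough illustration of thinking in tiers, the sketch below picks a capability level from simple properties of the task. The tier labels (`light`, `standard`, `frontier`), the `Task` fields, and the thresholds are assumptions made up for this example; they are not OpenAI model names or pricing tiers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    needs_tools: bool  # does the task call backend systems?
    steps: int         # rough number of reasoning or action steps


def choose_tier(task: Task) -> str:
    """Return a hypothetical capability tier for the given task."""
    # Agentic or long multi-step work goes to the most capable tier.
    if task.needs_tools or task.steps > 3:
        return "frontier"
    # Short multi-step tasks can use a mid-range tier.
    if task.steps > 1:
        return "standard"
    # Single-step tasks such as greetings or simple FAQs stay on the cheapest tier.
    return "light"
```

In a real deployment the routing signal would more likely come from intent, channel, and cost budgets, but the design choice is the same: capability is selected per task rather than fixed once for the whole system.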

This is exactly the direction the industry is moving.

But GPT-5.2 doesn’t change the fundamental reality

Even the best frontier model is still just one component in a production contact center.

Enterprises still need:

- consistent behavior across channels

- protection against hallucinations

- integration into CRMs, ticketing, billing, and knowledge systems

- governance, compliance, and auditability

- cost control across high-volume workloads

- reliability mechanisms when confidence drops

The future is not “pick the best model and run with it”.

The future is Hybrid AI, where multiple models, tools, retrieval, and deterministic systems work together, orchestrated intelligently, and where deterministic logic is combined with the power of LLMs to minimize hallucinations.

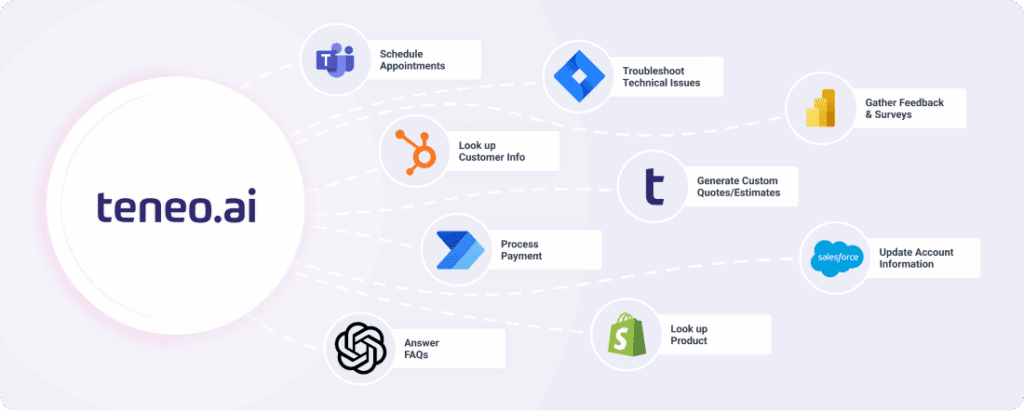

Teneo.ai: the Hybrid AI orchestrator

This is where Teneo.ai becomes the missing layer between GPT-5.2’s raw capability and real-world deployment.

Core understanding and routing are deterministic and explainable

Teneo lets you combine deterministic NLU with probabilistic LLMs, so the core understanding and routing stay stable and governed while LLMs add flexibility. This Hybrid AI approach keeps what the system can do explicitly defined in your flows and rules, and underpins accuracy levels around 99 percent with 90 percent total call understanding in real deployments.

Context is a first-class concept

Teneo keeps state across turns, sessions and channels. Variables, memory and long-running dialogs are handled inside the platform rather than reconstructed ad hoc through prompts. Callers can come back hours later and continue right where they left off.

Hybrid control in a single conversation

Blend AI and rules in one place. Let LLMs handle flexible language while deterministic steps keep key actions predictable, auditable and compliant. With Teneo you can plug in and switch between multiple LLM providers, using the right model at the right moment without rebuilding your automation.

LLMs run inside guardrails and across providers

In Teneo’s Hybrid AI model, LLMs are invoked only where they add value and are always constrained. You can route different parts of a conversation to different LLMs or vendors, combine their output with Teneo’s deterministic NLU and flows, and still ensure that no model can override business rules, invent actions or act outside permitted scopes.
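The general idea of keeping a model inside permitted scopes can be sketched in a few lines. This is a generic illustration, not Teneo's implementation or API: the `PERMITTED_ACTIONS` set, the action names, and the `guard` function are all hypothetical.

```python
# Hypothetical allowlist of actions the automation is permitted to run.
PERMITTED_ACTIONS = {"lookup_order", "send_summary"}


def guard(proposed: dict) -> dict:
    """Pass a model-proposed action through only if it is on the allowlist."""
    action = proposed.get("action")
    if action not in PERMITTED_ACTIONS:
        # The model cannot invent actions: anything else becomes a handoff.
        return {
            "action": "escalate_to_human",
            "reason": f"'{action}' is outside the permitted scope",
        }
    return proposed
```

Because the check runs in deterministic code after the model responds, a model that proposes an unknown or forbidden action produces a handoff instead of a backend call.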

Full traceability

Every intent match, branch, variable change, API call and backend action is visible. Audit logging and detailed traces let you see why the system behaved a certain way and which information it relied on, which is essential for compliance, debugging and tuning.
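For readers who want a picture of what such a trace could contain, here is a minimal sketch of a per-session event log serialized to JSON. The `Trace` and `TraceEvent` classes and the event kinds are assumptions for illustration only and do not describe Teneo's actual log format.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class TraceEvent:
    kind: str     # e.g. "intent_match", "variable_change", "api_call"
    detail: dict  # whatever the step relied on or produced


@dataclass
class Trace:
    session_id: str
    events: list[TraceEvent] = field(default_factory=list)

    def record(self, kind: str, **detail) -> None:
        """Append one event in the order it happened."""
        self.events.append(TraceEvent(kind, detail))

    def to_json(self) -> str:
        """Serialize the whole session trace for audit storage."""
        return json.dumps(asdict(self), sort_keys=True)
```

A session would call `record` at every decision point, so that a reviewer can later replay why a given branch or backend action was taken.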

Real system integration

Teneo is designed to complete work, not just answer questions. It reads and takes action across CRMs, billing systems, order management, identity platforms, and event-driven backends, so AI agents can resolve issues end to end while staying compliant with relevant regulations.

Handles complex, multi-step conversations that take actions for you

Teneo orchestrates voice and digital automation across CRMs, billing, internal systems, order management, identity, and more so the AI can execute tasks, not just talk about them.

Reuses the same AI agents across voice, chat, messaging and web

The same agents, intents, flows and policies drive every channel, with no need to rebuild. That reuse is how customers reach around 90 percent total call understanding, with 7 million monthly interactions handled by Teneo AI Agents in 42 languages.

Drag-and-drop, team-friendly studio

Teneo gives customer support teams, conversation designers, and engineers a shared environment for collaboration, with visual flows, reusable building blocks, and best practices built in.

Enterprises use Teneo to automate large parts of their contact center while keeping tight control over logic, data and governance.

So you can use GPT-5.2 aggressively, but safely, with outputs that are grounded in your business reality and designed to minimize hallucinations in production workflows.

Want to see GPT-5.2 in a real contact center stack?

If you are exploring GPT-5.2 for customer support, the smartest move is not just adopting the model. It’s adopting the orchestration layer that makes it reliable at scale.

That’s what we build at Teneo.ai. Contact us to learn more!